学习爬虫的最初始原因是为了抓取一些想要的信息,也是为了后续学习机器学习相关内容积累一些数据处理方面的知识;废话不多说,上代码。

代码

1 | # __author__ = 'c1rew' |

代码运行环境:

Python 3.5.2 |Anaconda 4.2.0 (x86_64)

Mac mini

macOS Sierra

Version 10.12.2

如果本地运行环境不一致,可能会出现一些错误,到时候再对应调整一下,如果不用MySQL存储数据,也可以使用Redis或者Excel表格也行。

MySQL相关

命令行中使用sql语句导出时,MySQL报错了:1

The MySQL server is running with the --secure-file-priv option so it cannot execute this statemen

google了一番,发现是MySQL的配置问题,需要修改/etc/my.cnf

如果这个目录下没有这个文件,就到MySQL的安装目录下去拷贝默认的一份配置,我的Mac是在下面的路径里:

/usr/local/Cellar/mysql/5.7.17/support-files/my-defalult.cnf

拷贝过去后,在my.cnf的最后增加一句 secure_file_priv=””

接下来再使用sql语句将之前已入库的数据导出csv表格,以便后续上传到BDP做简单的数据统计分析

1 | SELECT * FROM douban_top_movie |

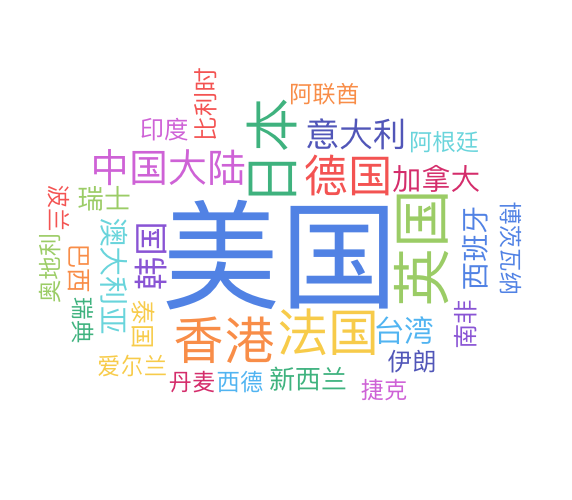

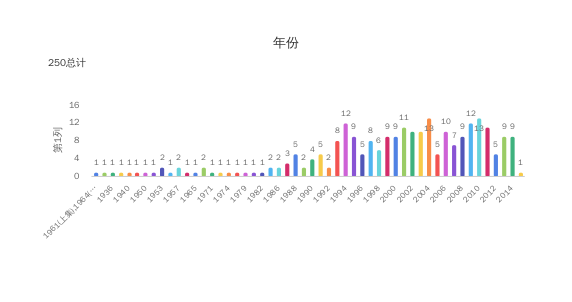

数据分析

仅仅挑选了几个比较好展示的数据,没有对类型进行分词处理,不然是可以对类型做一个饼图,这样效果是会好一些;

Reference

写这个代码的过程中,参考了一些类似资料,也是在学习Python爬虫课的过程中记录下练习过程;

推荐下七月在线的课,打折的时候还是有很多优惠:七月在线 (PS: 无利益相关哈!!)

以下是一些参考链接: